Problem

AI researchers spend days writing custom scripts in Jupyter notebooks to benchmark image models. The process is manual and time-intensive, making experiments slow to reproduce and hard to compare across models. Reproducibility is critical in the scientific community, but the randomness of image generation and the lack of standardized tooling make it nearly impossible to achieve without significant manual effort.

Solution

Research teams can now benchmark hundreds of prompts across models in hours and publish auditable leaderboards.

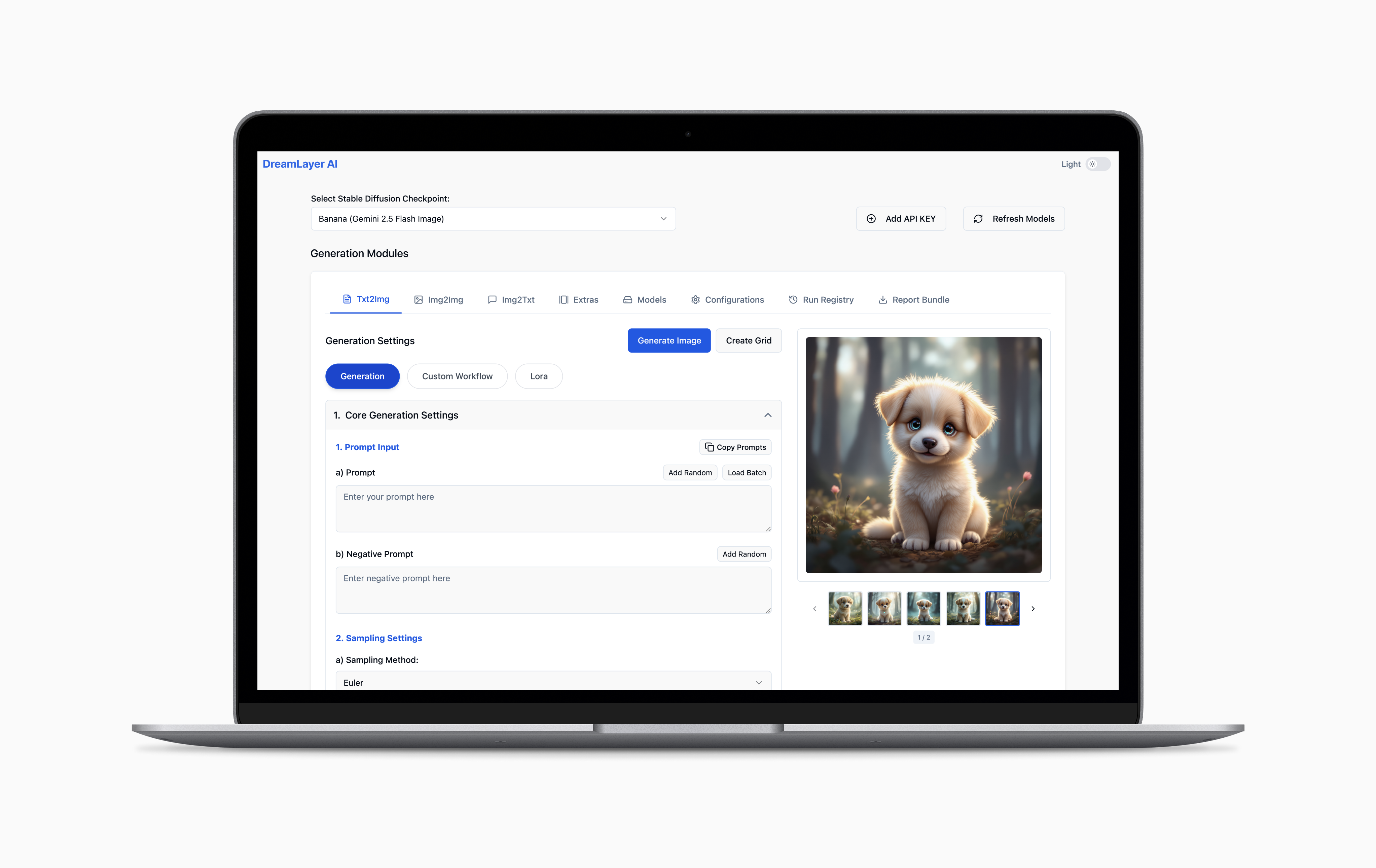

DreamLayer automates reproducible diffusion benchmarking by standardizing prompts, seeds, configurations, and metric evaluation across models. It replaces one-off Jupyter notebook experiments with a consistent, end-to-end evaluation pipeline.
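To make the idea of standardized prompts, seeds, and configurations concrete, here is a minimal Python sketch of a run matrix. It is an illustration only, not DreamLayer's actual code: `RunSpec`, `build_run_matrix`, and the default `steps` and `guidance_scale` values are hypothetical names and numbers chosen for the example.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from itertools import product


@dataclass(frozen=True)
class RunSpec:
    """One generation run, fully described by its inputs (hypothetical schema)."""
    model: str
    prompt: str
    seed: int
    steps: int
    guidance_scale: float

    def run_id(self) -> str:
        # Hash the sorted config so the same inputs always map to the same ID.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]


def build_run_matrix(models, prompts, seeds, steps=30, guidance_scale=7.5):
    """Pair every model with every prompt and seed under one shared config."""
    return [
        RunSpec(model, prompt, seed, steps, guidance_scale)
        for model, prompt, seed in product(models, prompts, seeds)
    ]


if __name__ == "__main__":
    runs = build_run_matrix(
        models=["model-a", "model-b"],        # placeholder model names
        prompts=["a red fox", "a city at night"],
        seeds=[0, 1],
    )
    for run in runs:
        print(run.run_id(), run.model, run.seed, run.prompt)
```

Because every model sees the same prompts, seeds, and settings, and each run has a stable ID derived from its inputs, a leaderboard entry can be traced back to the exact configuration that produced it.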

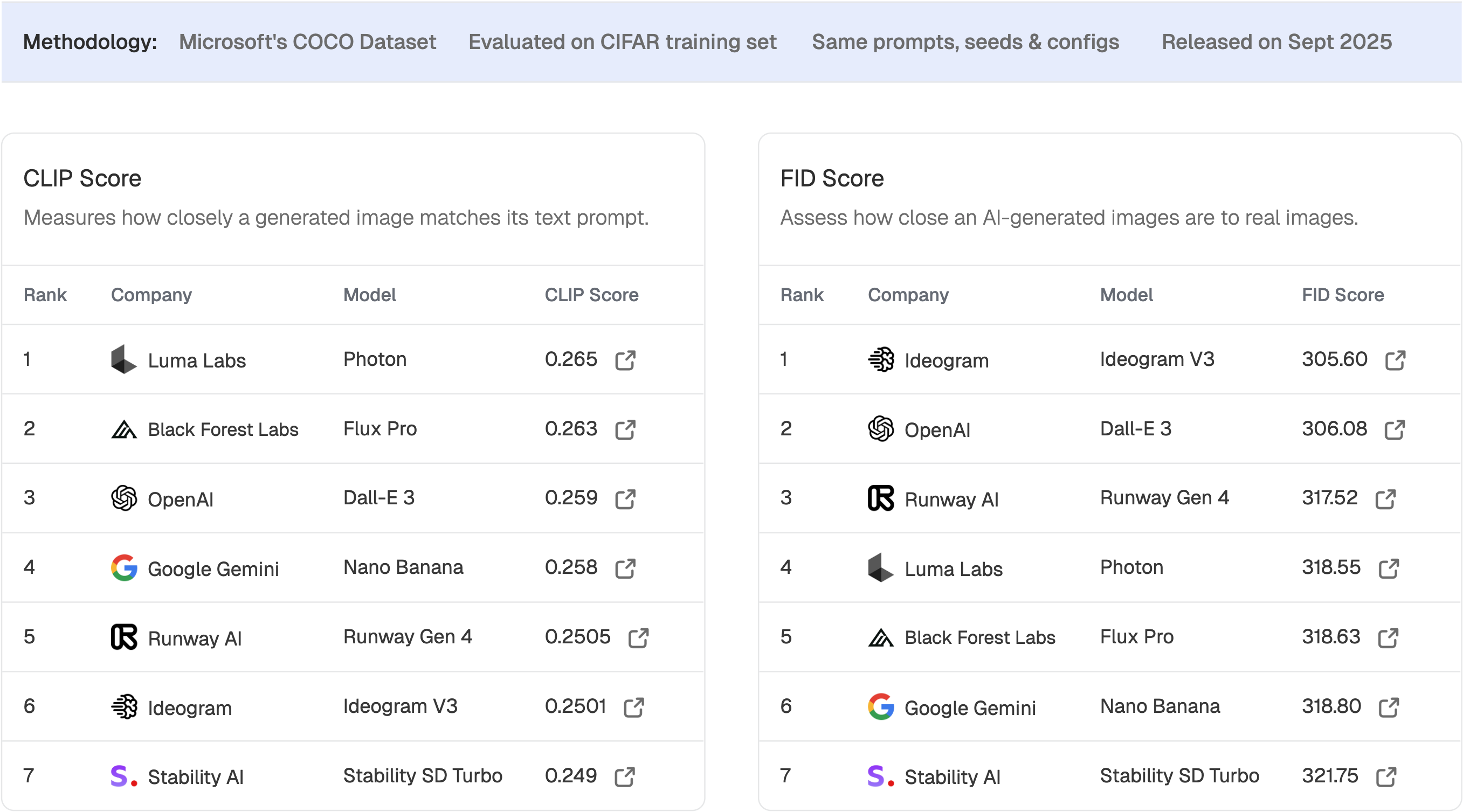

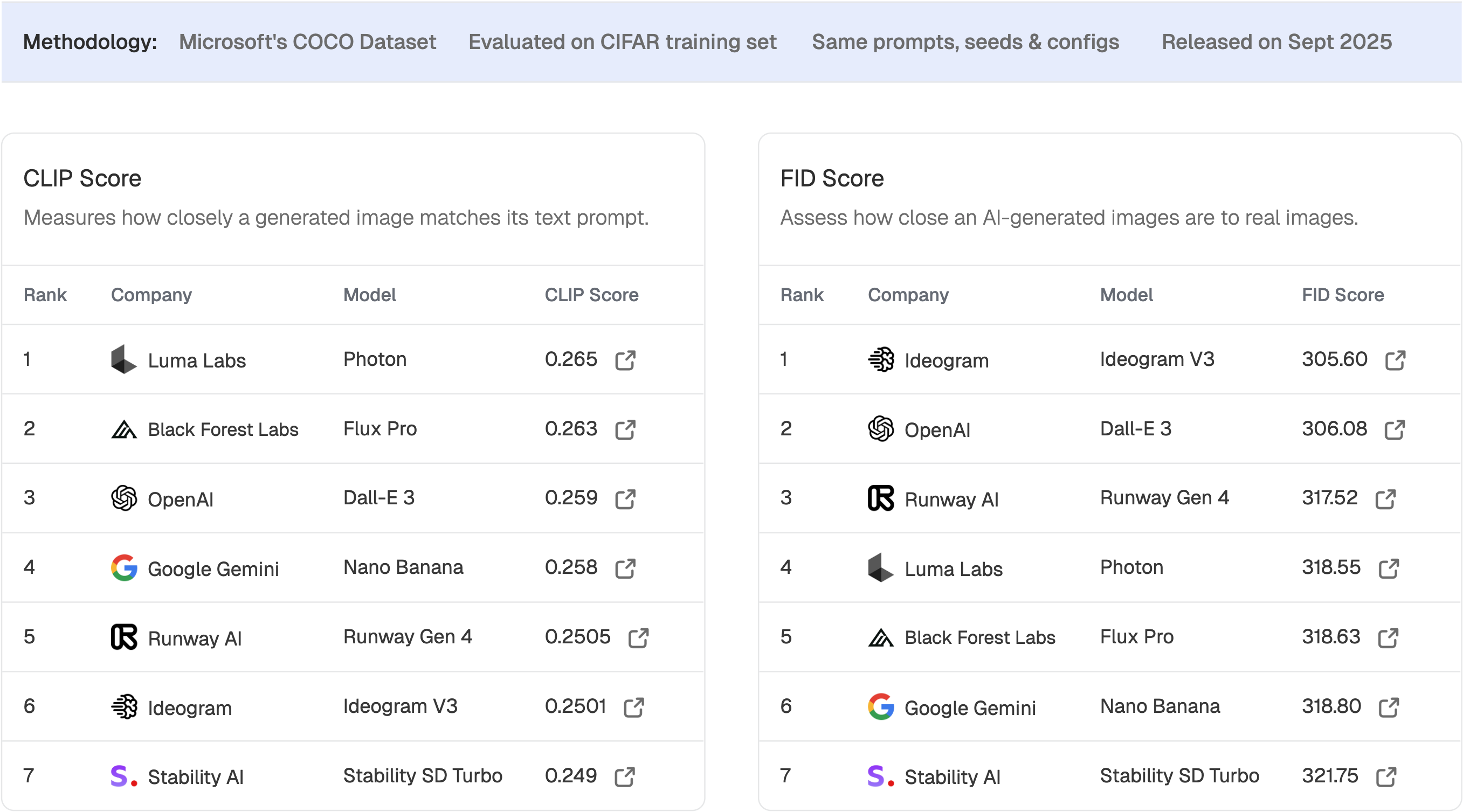

In 2 days, I benchmarked 7 models across 200 prompts and 5 metrics (CLIP Score, FID, F1, Precision, and Recall). Without DreamLayer, this would have taken 1-2 weeks.
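Of these five metrics, F1 is the one derived from two others: it is the harmonic mean of precision and recall. The short Python sketch below shows that relationship. The function name `f1_score` is my own for illustration, and the sketch assumes precision and recall have already been computed, however the pipeline measures them.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # A model with high precision but low recall is pulled toward the lower value.
    print(round(f1_score(0.9, 0.3), 3))
```

The harmonic mean penalizes imbalance, so a model cannot score well on F1 by excelling at only one of the two.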

Full Leaderboard

Learnings

1. Designing for Open-Source

- In open source, the goal is to enable a developer community to ship features quickly. In addition to look and feel, I prioritized developer experience, because a product can quickly become hard to use as more people contribute to it.

- Being intentional about the component library was critical. I chose Tailwind CSS with the Untitled UI kit for clean Figma-to-React translation and minimal styling overhead, preventing UI lag during long image generations. This had a big impact on the project’s velocity and maintainability.

2. Shipping Front-End as a Designer

- I partnered with a back-end engineer and built the first version in Lovable, then switched to Cursor once the front-end was connected to the back-end. The most rewarding part was seeing engineers contribute to code I initially built. My biggest takeaway is that designers can ship front-end code directly with these tools.

3. Simplifying Complex AI Tools

- Tools like AUTOMATIC1111 and ComfyUI can feel dense and intimidating, with heavy ML jargon. As an early adopter, I understood how that complexity accumulated over time.

- My process started with UX writing. I created a naming system to replace the mix of ML, engineering, and Photoshop terms. Then, I audited the information architecture of adjacent tools to understand their mental models and used those insights to redesign the UI with a clearer structure and language.
