While I was building Node App, most brands relied on influencers to create the visuals for their ads, websites, and social media feeds. When I started experimenting with Stable Diffusion in October 2022, it opened up a wave of ideas for how we could use it to improve brand experiences.

The more I explored, the clearer it became that the opportunity was much bigger than just replacing creators. But my first instinct was simple: what if brands could generate visuals without needing influencers at all?

Core Problem

Brand and influencer collaborations at scale are slow and unreliable.

User Problem

E-commerce brands wait 15 days from launching a campaign to getting content.

Even then, they risk influencers posting late, off brief, or not at all.

Business Problem

20% of collaborations end with influencers not posting.

90% of churn is tied to compliance issues. Missing posts directly hurt LTV.

Opportunity:

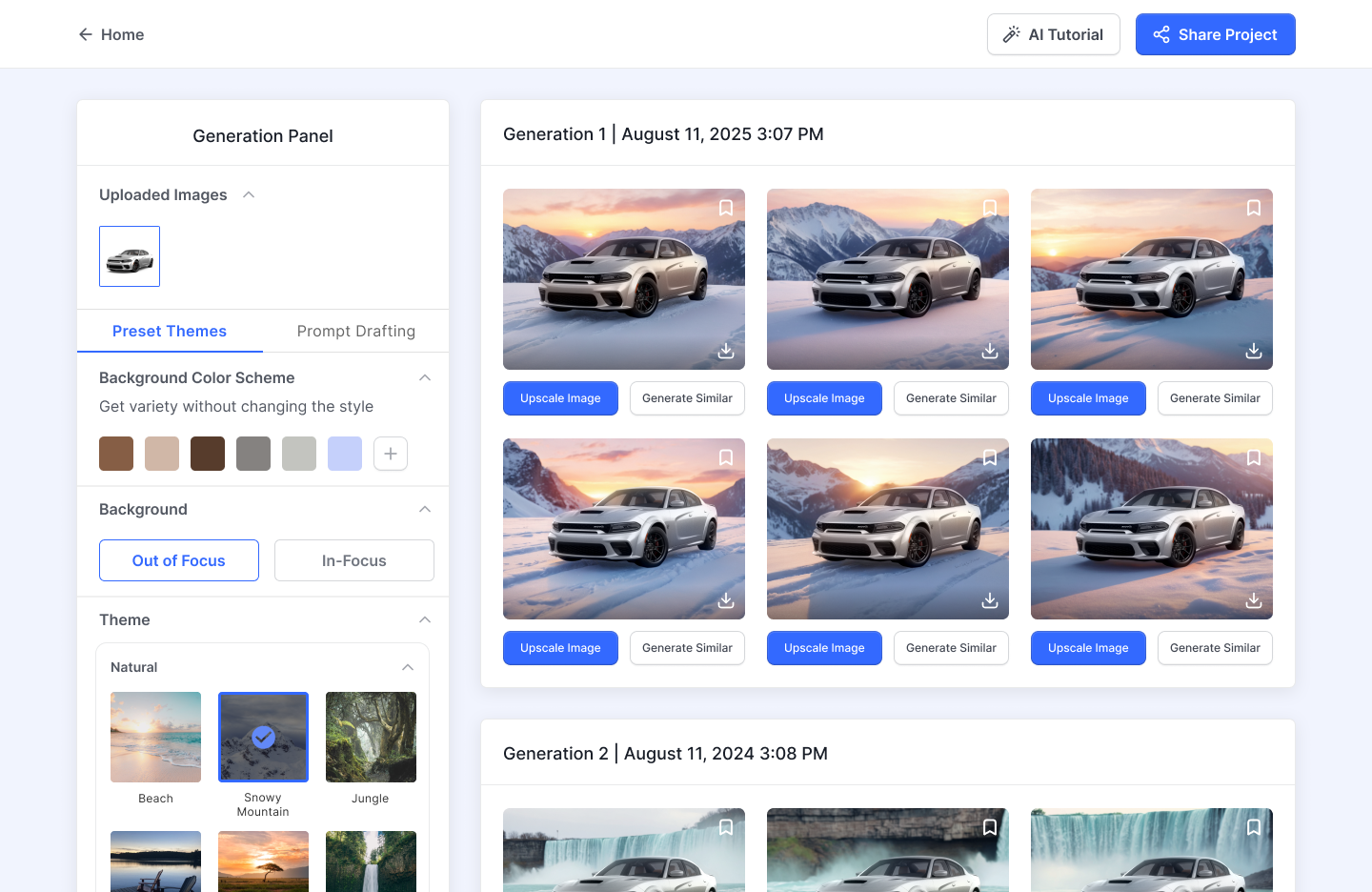

Stable Diffusion could reduce the slow and unreliable parts of content creation. In 2022, the tooling had a steep learning curve and was built for technical users, not marketers.

Hypothesis:

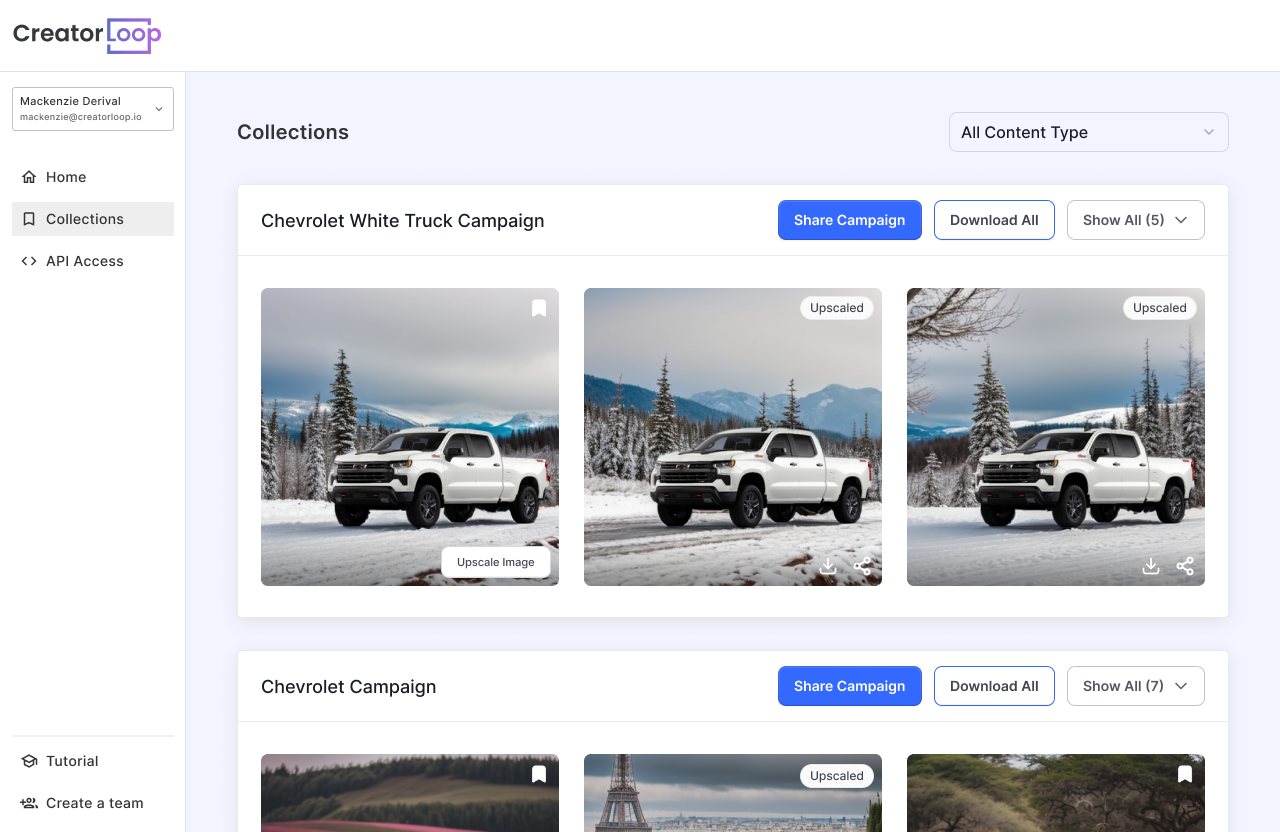

If AI image tools become usable for marketers, brands will shift budget from hiring influencers for product visuals to generating social content in-house.

Market Research

Competitive Analysis

I tested 25 AI image tools and mapped the landscape to understand how each product was positioned. This helped me separate what they claimed to solve from what they actually enabled users to do.

Here are a few products I tested:

Interviews

After interviewing 30 marketing managers from companies like McCann Worldgroup, Jarritos, and Hershey, we learned:

- Agencies liked the idea of using AI for ideation

- Brands were excited about the opportunity to create content on demand

- Many were skeptical about what AI can do

- Very few had experimented with other GenAI tools

- Large organizations didn’t know where it would fit in their budget

Takeaways From Research

1. Misalignment between user workflows and the design of AI image tools

Brands and agencies create content differently. Brands build brand libraries and posting schedules. Agencies do heavy ideation and operate with more rigid processes. Most AI image tools did not match either workflow well, which made them hard to adopt beyond quick experiments.

2. Most tools converged on the same obvious use case

Across the products I tested, the default wedge was product shots for e-commerce brands, fashion, or consumer image generation apps. The space felt crowded, and many tools looked similar in positioning. At best, brands could use them to place products in different settings.

3. The larger opportunity was a non-obvious B2B vertical

From working with e-commerce brands, I was skeptical of the product shot market because visuals are relatively cheap when working with influencers. My instinct was that a larger B2B vertical needed this technology more.

One agency owner summed it up: think about industries with heavy products, like industrial machines.

Product Strategy

The goal was to leverage Node’s existing distribution to drive early adoption. Early users would help us improve the system quickly and uncover the most promising B2B vertical to pursue.